Aurich Lawson / Getty Images

Matrix multiplication is at the heart of many machine learning breakthroughs, and it just got faster, twice. Last week, DeepMind announced it had discovered a more efficient way to perform matrix multiplication, beating a 50-year-old record. This week, two Austrian researchers at Johannes Kepler University Linz claim they have bested that new record by one step.

Matrix multiplication, which involves multiplying two rectangular arrays of numbers, is often found at the heart of speech recognition, image recognition, smartphone image processing, compression, and generating computer graphics. Graphics processing units (GPUs) are particularly good at performing matrix multiplication due to their massively parallel nature. They can dice a big matrix math problem into many pieces and attack parts of it simultaneously with a special algorithm.
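As a rough illustration of what dicing a matrix problem into pieces can look like, here is a minimal Python sketch of tiled (blocked) multiplication. The function names and the tile size are assumptions made for this example, and the loops run one after another here; on a GPU, each output tile could be handed to a different group of threads because no tile depends on another.

```python
def reference_matmul(A, B):
    """Schoolroom method: one dot product per output entry."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def tiled_matmul(A, B, tile=2):
    """Multiply A (n x m) by B (m x p) one output tile at a time."""
    n, m, p = len(A), len(B), len(B[0])
    C = [[0] * p for _ in range(n)]
    for i0 in range(0, n, tile):          # top row of the output tile
        for j0 in range(0, p, tile):      # left column of the output tile
            # Each (i0, j0) tile is independent of every other tile,
            # which is what makes the work easy to spread across cores.
            for k0 in range(0, m, tile):  # walk along the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, p)):
                        for k in range(k0, min(k0 + tile, m)):
                            C[i][j] += A[i][k] * B[k][j]
    return C
```

Both functions perform the same multiplications; tiling only changes the order and grouping of the work, not the amount of it.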

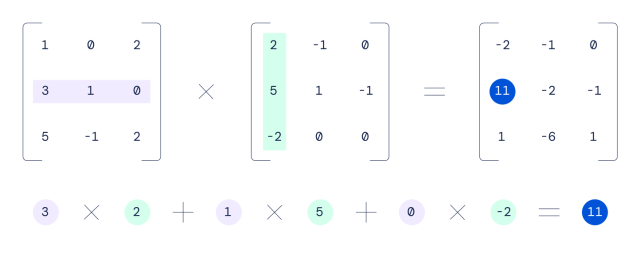

In 1969, a German mathematician named Volker Strassen discovered the previous-best algorithm for multiplying 4×4 matrices, which reduces the number of steps necessary to perform a matrix calculation. For example, multiplying two 4×4 matrices together using a traditional schoolroom method would take 64 multiplications, while Strassen’s algorithm can perform the same feat in 49 multiplications.
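To see where 64 and 49 come from, the following Python sketch counts scalar multiplications for both approaches. Strassen’s scheme multiplies two 2×2 matrices with seven products instead of eight; applying it recursively to a 4×4 matrix split into 2×2 blocks gives 7 × 7 = 49. The helper names and the single-element list used as a counter are choices made for this example, and the code assumes square matrices whose size is a power of two.

```python
def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def split(M):
    """Cut a square matrix into four equal quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def schoolroom(A, B, counter):
    """Traditional method; counter[0] tallies scalar multiplications."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                counter[0] += 1
    return C


def strassen(A, B, counter):
    """Strassen's recursion: seven block products per level."""
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    # Stitch the four quadrants back into one matrix.
    top = [left + right for left, right in zip(C11, C12)]
    bottom = [left + right for left, right in zip(C21, C22)]
    return top + bottom
```

Running both functions on the same pair of 4×4 matrices should produce identical results while the counters end at 64 and 49 respectively. The savings come at the cost of extra additions and subtractions, which are much cheaper than multiplications in this accounting.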

DeepMind

Using a neural network called AlphaTensor, DeepMind discovered a way to reduce that count to 47 multiplications, and its researchers published a paper about the achievement in Nature last week.

Going from 49 steps to 47 doesn’t sound like much, but when you consider how many trillions of matrix calculations take place in a GPU every day, even incremental improvements can translate into large efficiency gains, allowing AI applications to run more quickly on existing hardware.

When math is just a game, AI wins

AlphaTensor is a descendant of AlphaGo (which bested world-champion Go players in 2017) and AlphaZero, which tackled chess and shogi. DeepMind calls AlphaTensor the “first AI system for discovering novel, efficient and provably correct algorithms for fundamental tasks such as matrix multiplication.”

To discover more efficient matrix math algorithms, DeepMind set up the problem like a single-player game. The company wrote about the process in more detail in a blog post last week:

In this game, the board is a three-dimensional tensor (array of numbers), capturing how far from correct the current algorithm is. Through a set of allowed moves, corresponding to algorithm instructions, the player attempts to modify the tensor and zero out its entries. When the player manages to do so, this results in a provably correct matrix multiplication algorithm for any pair of matrices, and its efficiency is captured by the number of steps taken to zero out the tensor.
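The game in the quote can be sketched for the smallest interesting case, 2×2 matrices, using Strassen’s seven products as the moves. This is an illustration under stated assumptions, not DeepMind’s code: the index layout of the tensor, the function names, and the encoding of each move as three coefficient vectors (u, v, w) are choices made for this example. Each move subtracts the outer product of u, v, and w from the board, and the board reaches all zeros after exactly seven moves.

```python
from itertools import product


def matmul_tensor(n):
    """Board for n x n multiplication: entry [a][b][c] is 1 when
    A-entry a times B-entry b contributes to C-entry c (row-major)."""
    size = n * n
    T = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i, j, k in product(range(n), repeat=3):
        T[i * n + k][k * n + j][i * n + j] = 1
    return T


# One move per Strassen product, entries ordered [11, 12, 21, 22].
# u picks the A entries, v picks the B entries, and w says which
# C entries the product is added to (or subtracted from).
STRASSEN_MOVES = [
    ([1, 0, 0, 1],  [1, 0, 0, 1],  [1, 0, 0, 1]),   # (A11+A22)(B11+B22)
    ([0, 0, 1, 1],  [1, 0, 0, 0],  [0, 0, 1, -1]),  # (A21+A22)B11
    ([1, 0, 0, 0],  [0, 1, 0, -1], [0, 1, 0, 1]),   # A11(B12-B22)
    ([0, 0, 0, 1],  [-1, 0, 1, 0], [1, 0, 1, 0]),   # A22(B21-B11)
    ([1, 1, 0, 0],  [0, 0, 0, 1],  [-1, 1, 0, 0]),  # (A11+A12)B22
    ([-1, 0, 1, 0], [1, 1, 0, 0],  [0, 0, 0, 1]),   # (A21-A11)(B11+B12)
    ([0, 1, 0, -1], [0, 0, 1, 1],  [1, 0, 0, 0]),   # (A12-A22)(B21+B22)
]


def apply_move(T, u, v, w):
    """Subtract the rank-one tensor u x v x w from the board in place."""
    for a, b, c in product(range(len(u)), range(len(v)), range(len(w))):
        T[a][b][c] -= u[a] * v[b] * w[c]


def is_zero(T):
    return all(x == 0 for plane in T for row in plane for x in row)


def play(T, moves):
    """Apply moves in order; return how many it took to zero the board,
    or None if the board is still nonzero after the last move."""
    for steps, (u, v, w) in enumerate(moves, start=1):
        apply_move(T, u, v, w)
        if is_zero(T):
            return steps
    return None
```

Calling `play(matmul_tensor(2), STRASSEN_MOVES)` should return 7, matching the seven multiplications in Strassen’s 2×2 scheme. Finding a shorter sequence of moves for a larger board is the kind of search the article describes AlphaTensor performing.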

DeepMind then trained AlphaTensor using reinforcement learning to play this fictional math game, similar to how AlphaGo learned to play Go, and it gradually improved over time. Eventually, it rediscovered Strassen’s work and that of other human mathematicians, then it surpassed them, according to DeepMind.

In a more complicated example, AlphaTensor discovered a new way to perform 5×5 matrix multiplication in 96 steps (versus 98 for the older method). This week, Manuel Kauers and Jakob Moosbauer of Johannes Kepler University in Linz, Austria, published a paper claiming they have reduced that count by one, down to 95 multiplications. It’s no coincidence that this apparently record-breaking new algorithm came so quickly, because it built off of DeepMind’s work. In their paper, Kauers and Moosbauer write, “This solution was obtained from the scheme of [DeepMind’s researchers] by applying a sequence of transformations leading to a scheme from which one multiplication could be eliminated.”

Tech progress builds off itself, and with AI now searching for new algorithms, it’s possible that other longstanding math records could fall soon. Similar to how computer-aided design (CAD) allowed for the development of more complex and faster computers, AI may help human engineers accelerate its own rollout.